AI is everywhere right now. Some of it is exciting—new ways to save time, new tools for doing good. But let’s be honest: it also feels a little scary. Because if you can use AI to make your work easier, cybercriminals can use it to make their scams more convincing.

That doesn’t mean you need to lose sleep over it. It just means knowing where the real risks are—and how to keep your mission safe.

The “Fake Face” in Your Zoom Call

Imagine logging into a video meeting and seeing your executive director… only it isn't really them. Cybercriminals are now using AI deepfakes, meaning fake video and voice generated by AI, to impersonate trusted leaders. Their goal? Trick your staff into sending money, sharing login details, clicking the wrong link, or downloading something dangerous.

👉 What to do: Remind your team it's okay to pause. If a request feels off, especially one involving money or passwords, double-check before acting. Call the person at a number you already have, or use another channel you trust. A two-minute call could save you a massive headache.

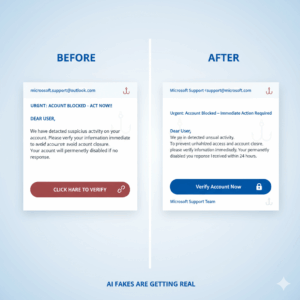

Emails That Look a Little Too Real

Phishing emails used to be easy to spot—bad spelling, clunky grammar, strange formatting. Not anymore. With AI, those scams now look polished, professional, and believable.

👉 What to do: Multi-factor authentication (MFA) is still one of your strongest defenses. Even if someone clicks a phishing link and types in their password, MFA stops attackers from using that stolen password to walk right in. And keep staff training simple but consistent: help people spot red flags like urgency, strange requests, or unusual attachments.

“Helpful” AI Tools That Aren’t What They Seem

Cybercriminals love shiny new trends. Right now, that means building fake AI tools loaded with malware. They look legit, but they’re just waiting to infect your systems.

👉 What to do: Before trying out new AI software, run it by us. We’ll check if it’s safe so you don’t have to worry.

Why This Matters for You

You didn’t start your non-profit to worry about phishing emails or deepfakes. But when tech fails—or worse, when you get hit by a cyberattack—it pulls time, energy, and resources away from your mission. That’s the real cost of ignoring these risks.

The good news? You don’t need to carry that weight alone. With the right defenses—MFA, simple staff training, and a trusted IT partner—AI doesn’t have to be scary.

Let’s Clear Out the Ghosts

Your mission is too important to get sidetracked by cybercriminals. Let’s make sure the only thing AI does for your organization is help, not harm.

👉 Want AI tools that work for you instead of against you? Sign up for our campaign writing webinar at https://www.humanitcompany.ca/ai-assistant-webinar/
